Triangulation.. Finally! I've wanted to do this for a whole semester, and luckily the winter break gave me the time. There's a lot of room for improvement, but still, one step further!

*UPDATE* (in the middle of writing this post, UGH)

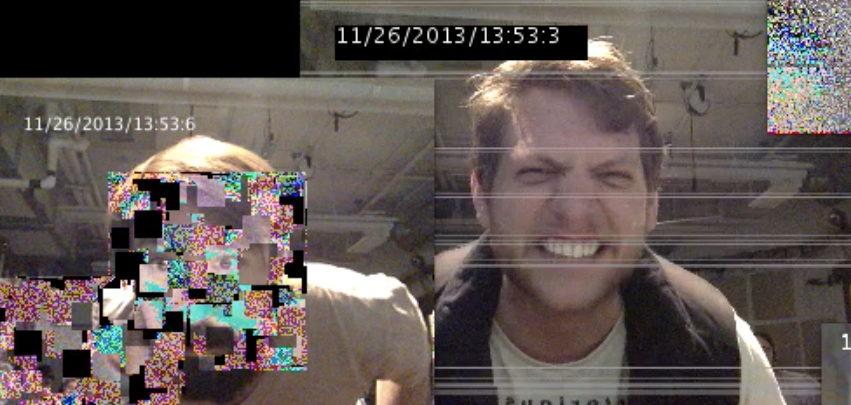

New version. Normal speed!

While I was documenting this, the poor performance (both the slow running speed and the wrong color picking) bothered me a lot, so I went through the whole code again, making adjustments here and there. And then I found out what the problem was: the random placement of points for the triangles! It not only slows down the process, it also makes the triangles pick the wrong color. OH YEAH SO HAPPY. It's the same “bitter yet sweet moment” I usually have when coding… I think I’m ready for the new semester!

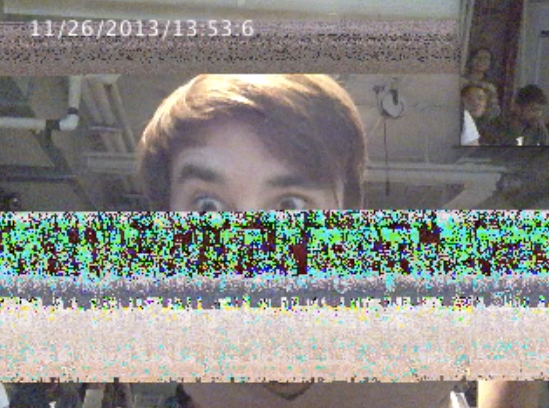

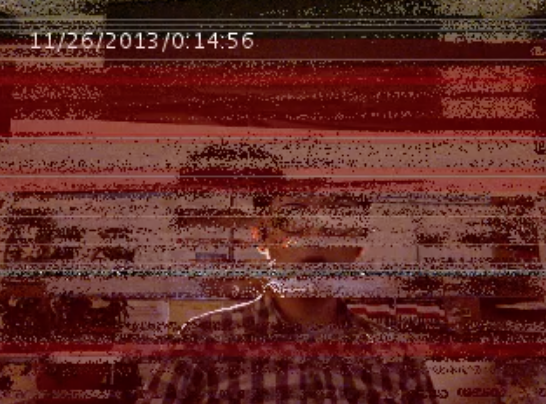

Old version, at x3 speed! Slow and chaotic.

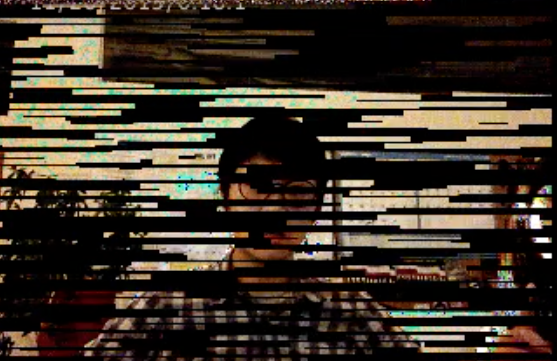

Old line version. Slow, but it seems to give a better result with the random point placement!!

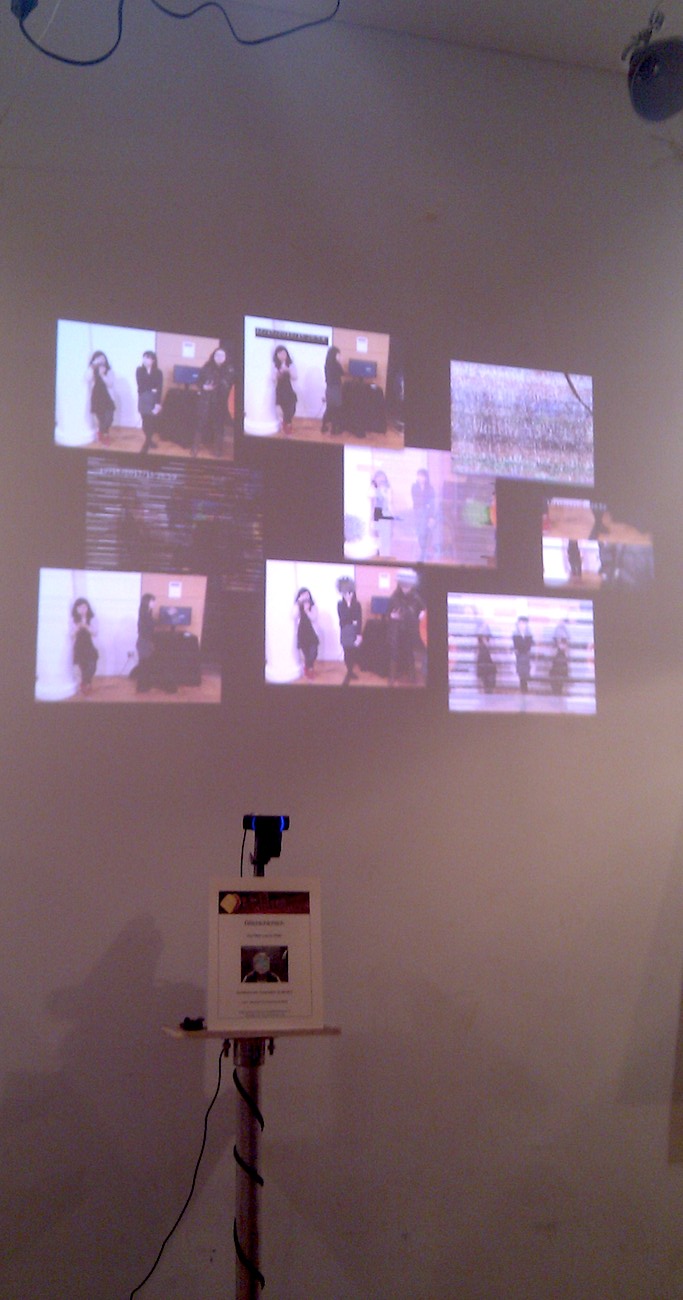

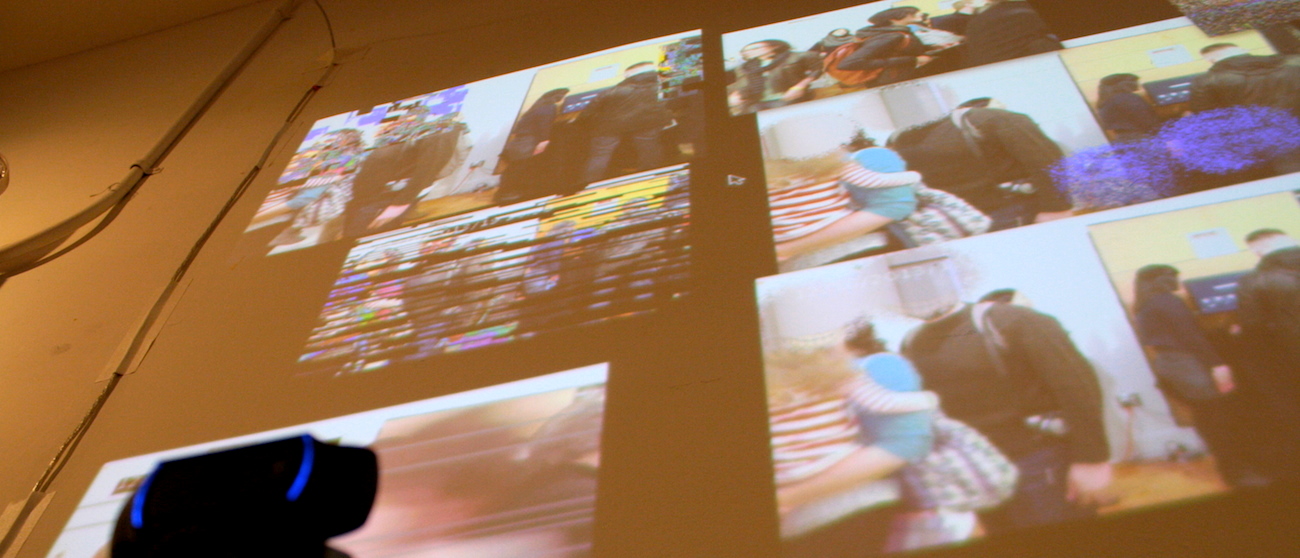

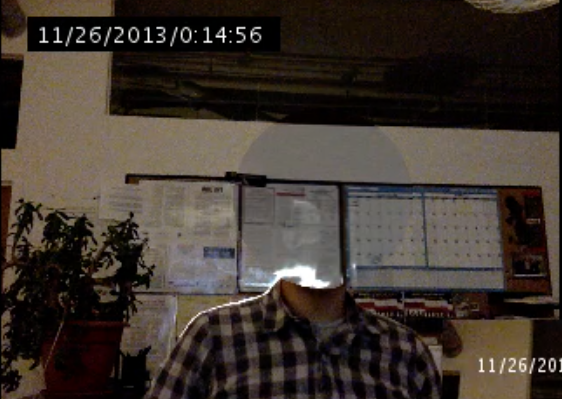

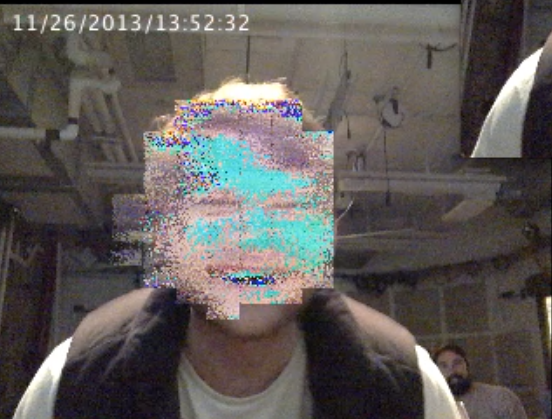

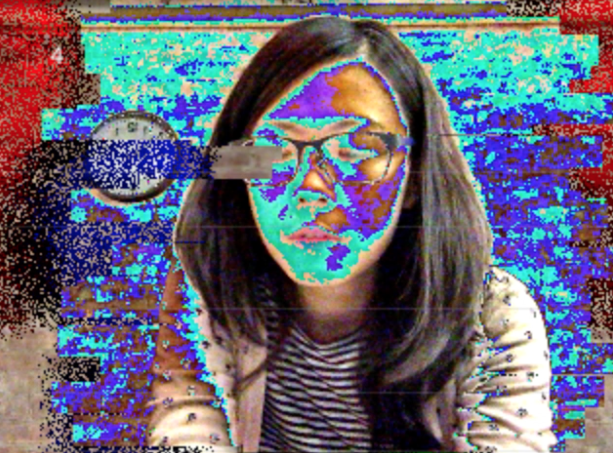

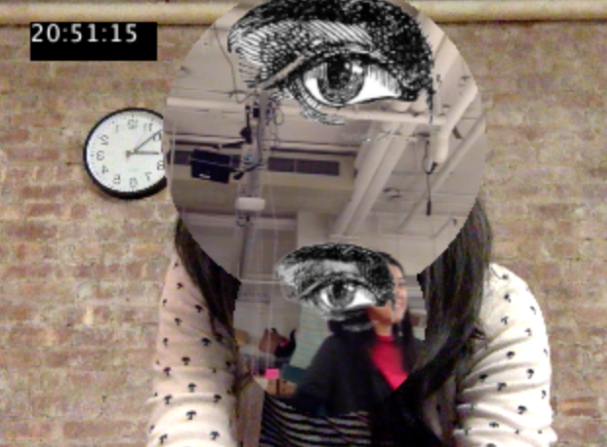

Image Gallery

Basic idea of code

- capture an image from the webcam and save it as PImage source

- iterate through source pixel by pixel, comparing either a) color or b) brightness differences (I found that comparing by color is more accurate).

- if the difference is bigger than a certain threshold, plant a point in the Voronoi class. Voronoi does all the calculation to turn the points into triangles.

- for each triangle that comes out of Voronoi, use getCentroid() to pick up the color, then fill the triangle, which is drawn with beginShape(), endShape() and vertex().

- wipe out the Voronoi and build a new one every 10 frames to speed up the performance.
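To make the point-picking step a bit more concrete, here is a minimal sketch in plain Java (so it runs without Processing). It is not my actual sketch code: the class name `EdgePointSampler`, the `step` grid spacing, and the choice to compare each sample with its right-hand neighbor are all assumptions for illustration. The `pixels` array is assumed to hold packed ARGB ints, the way `PImage.pixels` does.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, for illustration only.
class EdgePointSampler {

    // Sum of absolute differences of the red, green and blue channels
    // of two packed ARGB colors (alpha is ignored).
    static int colorDistance(int a, int b) {
        int dr = Math.abs(((a >> 16) & 0xFF) - ((b >> 16) & 0xFF));
        int dg = Math.abs(((a >> 8) & 0xFF) - ((b >> 8) & 0xFF));
        int db = Math.abs((a & 0xFF) - (b & 0xFF));
        return dr + dg + db;
    }

    // Walks the image on a regular grid (no random offset) and keeps a
    // point wherever the color differs from the next grid sample to the
    // right by more than the threshold.
    static List<int[]> pickPoints(int[] pixels, int width, int height,
                                  int step, int threshold) {
        List<int[]> points = new ArrayList<>();
        for (int y = 0; y < height; y += step) {
            for (int x = 0; x + step < width; x += step) {
                int here = pixels[y * width + x];
                int next = pixels[y * width + x + step];
                if (colorDistance(here, next) > threshold) {
                    points.add(new int[] {x, y});
                }
            }
        }
        return points;
    }
}
```

In a real sketch, the returned points would be the ones handed to Voronoi; a bigger `step` means fewer samples per frame, which is presumably where most of the speed-up from a grid comes from.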

References

- from Robbie Tilton, an ITP alumnus! This is where my base code came from. His clear description made me unafraid to try the code out. From him I got the idea of using Cols and Rows to boost performance, and of placing the triangle points with a random deviation of 5 pixels to make the result look less grid-like (BUT the randomness is not good for picking colors, and it also slows down the performance).

- from Jan Vantomme. Very good documentation! From him I learned the difference between getCentroid() and getSites(), and also that, since getCentroid() and getSites() don’t return the Voronoi regions and points in the same order, filling the right color at the right position means looping through the regions and picking and filling the color in the same pass.
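The "pick the color at the centroid" idea can be sketched in plain Java like this. It is only a stand-in for what getCentroid() gives me in the sketch, not the library's code: the class `CentroidColor` and both method names are made up for illustration, and `pixels` is again assumed to be a packed ARGB array like `PImage.pixels`.

```java
// Hypothetical helper, for illustration only.
class CentroidColor {

    // The centroid of a triangle is the average of its three vertices.
    static float[] centroid(float ax, float ay, float bx, float by,
                            float cx, float cy) {
        return new float[] {(ax + bx + cx) / 3f, (ay + by + cy) / 3f};
    }

    // Looks up the pixel under the triangle's centroid, clamping the
    // position so it always stays inside the image.
    static int colorAtCentroid(int[] pixels, int width, int height,
                               float ax, float ay, float bx, float by,
                               float cx, float cy) {
        float[] c = centroid(ax, ay, bx, by, cx, cy);
        int x = Math.min(width - 1, Math.max(0, Math.round(c[0])));
        int y = Math.min(height - 1, Math.max(0, Math.round(c[1])));
        return pixels[y * width + x];
    }
}
```

Computing the color from the same three vertices that get drawn, inside the same loop, keeps each color tied to its own triangle, so the ordering of two separate lists never comes into play.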

Inspiration & Further

- amazing algorithmic portraits by Diana Lange.

- triangulation tools made by Bezalel workshop, so complicated, yet the results are so gorgeous.

- hemesh –> makes it possible to build 3D triangulation meshes (printable too)

Code, as below: